The Guardrails block validates and protects your AI workflows by checking content against multiple validation types. Use it to ensure data quality, catch hallucinations, detect personally identifiable information (PII), and enforce format requirements before content moves through your workflow.

Validation types

JSON validation

Validates that content is properly formatted JSON. Useful for ensuring that structured LLM outputs can be parsed safely.

Use cases:

- Validate JSON responses from Agent blocks before parsing them

- Ensure API payloads are properly formatted

- Check the integrity of structured data

Output:

- passed: true if the content is valid JSON, false otherwise

- error: Error message if validation fails (e.g., "Invalid JSON: Unexpected token...")
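The check and its output can be illustrated with a minimal Python sketch. This is not the block's actual implementation: the function name `validate_json` and the exact error wording are assumptions made for illustration. Only the `passed` and `error` fields come from the description above.

```python
import json


def validate_json(content: str) -> dict:
    """Return a result shaped like the JSON validation output described above."""
    try:
        json.loads(content)
    except json.JSONDecodeError as exc:
        # The error wording is an assumption; the real block's message may differ.
        return {"passed": False, "error": f"Invalid JSON: {exc.msg}"}
    return {"passed": True, "error": None}


if __name__ == "__main__":
    print(validate_json('{"status": "ok"}'))
    print(validate_json('{"status": "ok",}'))  # trailing comma is not valid JSON
```

Validating first means the downstream parsing step only ever sees content that is known to parse.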

Regex validation

Checks whether content matches a specified regular expression pattern.

Use cases:

- Validate email addresses

- Check phone number formats

- Verify URLs or custom identifiers

- Enforce specific text patterns

Configuration:

- Regex Pattern: The regular expression to match against (e.g., ^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$ for emails)

Output:

- passed: true if the content matches the pattern, false otherwise

- error: Error message if validation fails
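As a rough illustration, the sketch below applies the email pattern from the configuration example using Python's `re` module. The function name `validate_regex` and the error messages are hypothetical. The source does not say whether the block uses search or full-match semantics, so the use of `search` here is an assumption; for a pattern anchored with ^ and $, as in the example, the two behave almost identically.

```python
import re

# Email pattern taken from the configuration example above.
EMAIL_PATTERN = r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$"


def validate_regex(content: str, pattern: str) -> dict:
    """Return a result shaped like the regex validation output described above."""
    try:
        compiled = re.compile(pattern)
    except re.error as exc:
        # A malformed pattern is reported as a failure (assumed behavior).
        return {"passed": False, "error": f"Invalid regex pattern: {exc}"}
    if compiled.search(content):
        return {"passed": True, "error": None}
    return {"passed": False, "error": "Content does not match the pattern"}


if __name__ == "__main__":
    print(validate_regex("user@example.com", EMAIL_PATTERN))
    print(validate_regex("not-an-email", EMAIL_PATTERN))
```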

Hallucination detection

Uses Retrieval-Augmented Generation (RAG) with LLM scoring to detect when AI-generated content contradicts or is not grounded in your knowledge base.

How it works:

- Queries your knowledge base for relevant context

- Sends both the AI output and the retrieved context to an LLM

- The LLM assigns a confidence score (0-10 scale)

  - 0 = Full hallucination (completely ungrounded)

  - 10 = Fully grounded (completely supported by the knowledge base)

- Validation passes if the score ≥ threshold (default: 3)

Configuration:

- Knowledge Base: Select from your existing knowledge bases

- Model: Choose the LLM used for scoring (requires strong reasoning; GPT-4o or Claude 3.7 Sonnet recommended)

- API Key: Authentication for the selected LLM provider (hidden automatically for hosted/Ollama or VLLM-compatible models)

- Confidence Threshold: Minimum score to pass (0-10, default: 3)

- Top K (Advanced): Number of knowledge base chunks to retrieve (default: 10)

Output:

- passed: true if the confidence score ≥ threshold

- score: Confidence score (0-10)

- reasoning: The LLM's explanation for the score

- error: Error message if validation fails
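The pass/fail rule can be sketched in Python as follows. Retrieval and LLM scoring are replaced by a hypothetical `scorer` callable that returns a score and a reasoning string. `toy_scorer` is a deliberately naive stand-in used only to make the example runnable, and all function names here are assumptions. Only the 0-10 scale, the default threshold of 3, and the output fields come from the description above.

```python
from typing import Callable, List, Tuple

# Hypothetical signature: (answer, context_chunks) -> (score from 0 to 10, reasoning)
Scorer = Callable[[str, List[str]], Tuple[float, str]]


def toy_scorer(answer: str, chunks: List[str]) -> Tuple[float, str]:
    """Naive stand-in for the LLM: fully grounded only on a verbatim match."""
    if any(answer in chunk for chunk in chunks):
        return 10.0, "Answer appears verbatim in the retrieved context."
    return 0.0, "Answer was not found in the retrieved context."


def check_hallucination(
    answer: str, chunks: List[str], scorer: Scorer, threshold: float = 3.0
) -> dict:
    """Apply the rule described above: passed when score >= threshold."""
    score, reasoning = scorer(answer, chunks)
    if not 0 <= score <= 10:
        # Treating an out-of-range score as an error is an assumption.
        return {"passed": False, "error": f"Score out of range: {score}"}
    return {"passed": score >= threshold, "score": score, "reasoning": reasoning}


if __name__ == "__main__":
    kb = ["Refunds are accepted within 30 days."]
    print(check_hallucination("Refunds are accepted within 30 days.", kb, toy_scorer))
    print(check_hallucination("Refunds take one year.", kb, toy_scorer))
```

Because the comparison is inclusive, a score exactly equal to the threshold passes. Raising the threshold makes the check stricter.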

Use cases:

- Validate agent responses against documentation

- Ensure customer support answers are accurate

- Verify that generated content matches the source material

- Quality control for RAG applications

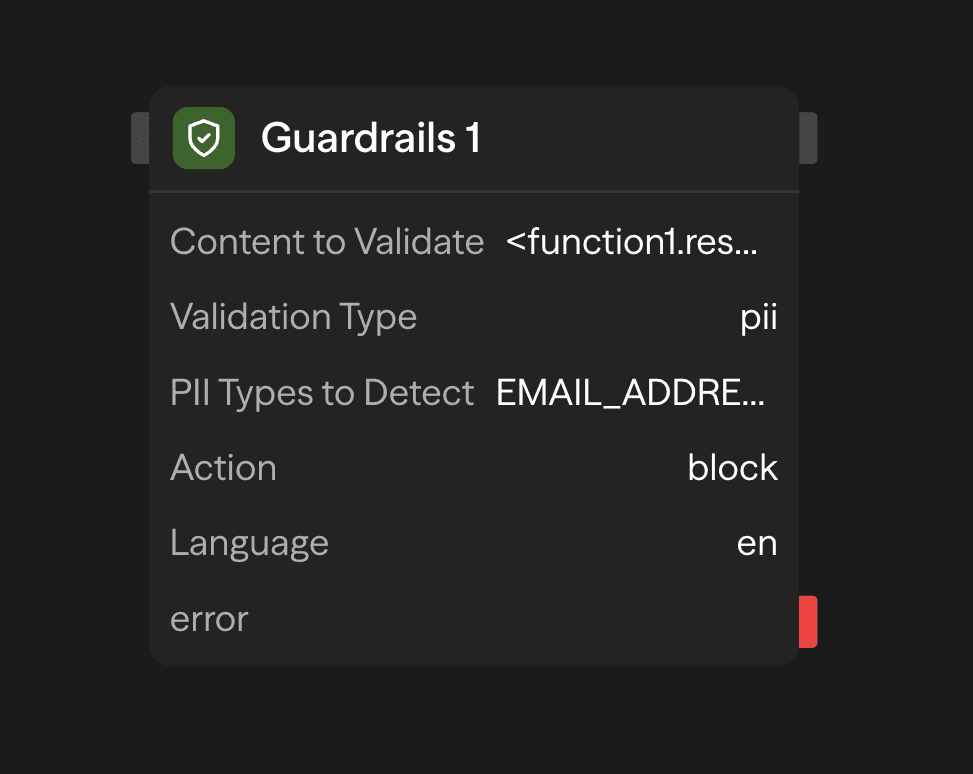

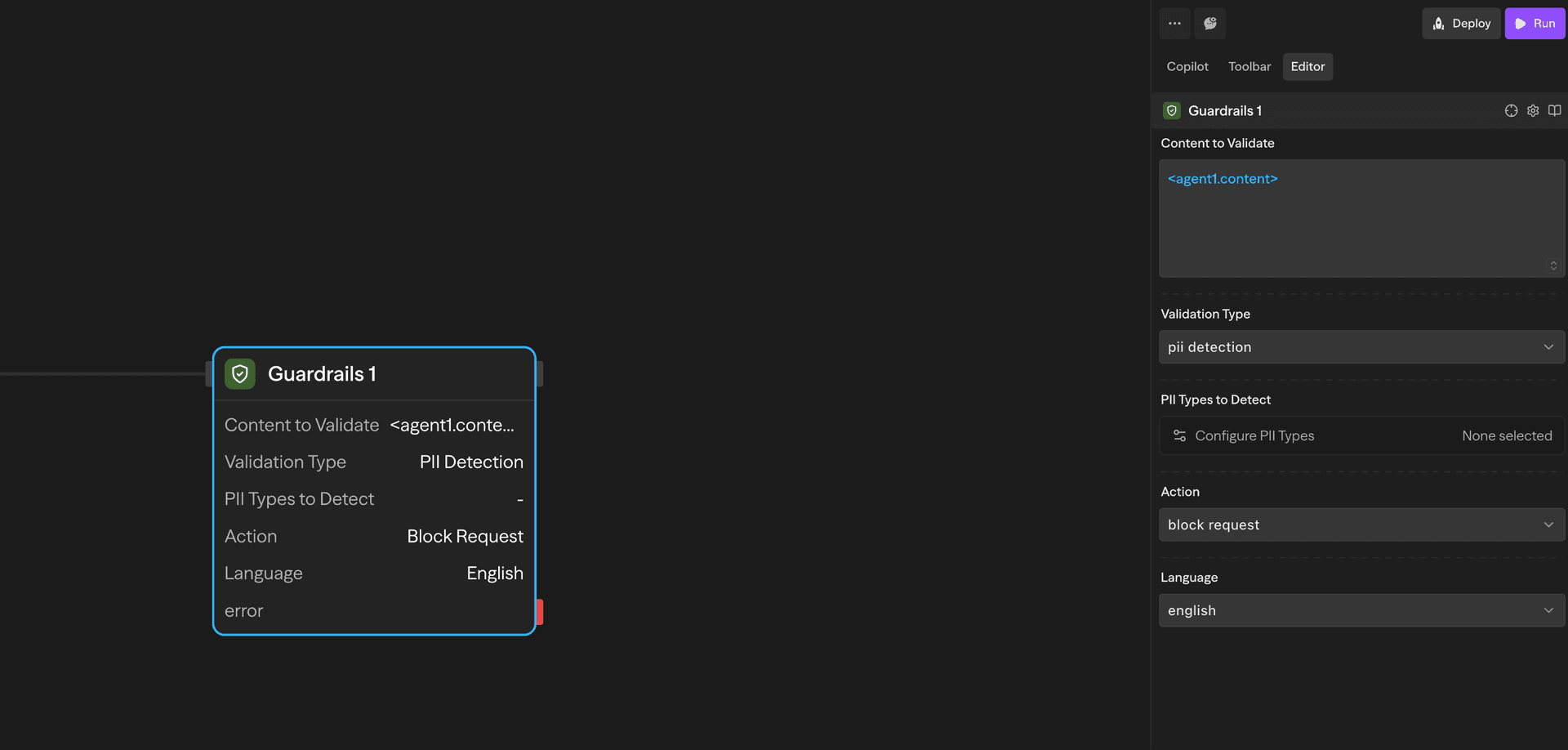

PII detection

Detects personally identifiable information using Microsoft Presidio. Supports more than 40 entity types across multiple countries and languages.

How it works:

- Pass in the content to validate (e.g., <agent1.content>)

- Select the PII types to detect using the modal selector

- Choose the detection mode (Detect or Mask)

- The content is scanned for matching PII entities

- The block returns the detection results and, optionally, the masked text

Configuration:

- PII Types to Detect: Select from grouped categories via the modal selector

  - Common: Person name, email, phone, credit card, IP address, etc.

  - USA: SSN, driver's license, passport, etc.

  - UK: NHS number, National Insurance number

  - Spain: NIF, NIE, CIF

  - Italy: Fiscal code, driver's license, VAT code

  - Poland: PESEL, NIP, REGON

  - Singapore: NRIC/FIN, UEN

  - Australia: ABN, ACN, TFN, Medicare

  - India: Aadhaar, PAN, passport, voter number

- Mode:

  - Detect: Only identify PII (default)

  - Mask: Replace detected PII with masked values

- Language: Detection language (default: English)

Output:

- passed: false if any of the selected PII types are detected

- detectedEntities: Array of detected PII with type, location, and confidence

- maskedText: Content with PII masked (only if mode = "Mask")

- error: Error message if validation fails
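To make the Detect and Mask modes concrete, here is a simplified Python sketch of the output shape. It does not use Presidio: two hand-written regular expressions stand in for Presidio's recognizers. The function name `check_pii`, the entity field names (`type`, `start`, `end`, `score`), and the `<ENTITY_TYPE>` mask format are assumptions for illustration.

```python
import re

# Simplified stand-in patterns; Presidio's recognizers are far more thorough.
PATTERNS = {
    "EMAIL_ADDRESS": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def check_pii(content: str, entity_types: list, mode: str = "Detect") -> dict:
    """Scan for the selected entity types; mask matches when mode is "Mask"."""
    detected = []
    for entity_type in entity_types:
        pattern = PATTERNS.get(entity_type)
        if pattern is None:
            continue  # entity types without a stand-in pattern are skipped
        for match in pattern.finditer(content):
            detected.append(
                {
                    "type": entity_type,
                    "start": match.start(),
                    "end": match.end(),
                    "score": 1.0,  # fixed confidence; real detectors vary
                }
            )
    # Validation fails as soon as any selected PII type is found.
    result = {"passed": not detected, "detectedEntities": detected}
    if mode == "Mask":
        masked = content
        # Replace from the end of the text so earlier offsets stay valid.
        for entity in sorted(detected, key=lambda e: e["start"], reverse=True):
            masked = (
                masked[: entity["start"]]
                + f"<{entity['type']}>"
                + masked[entity["end"] :]
            )
        result["maskedText"] = masked
    return result


if __name__ == "__main__":
    text = "Contact jane@example.com, SSN 123-45-6789."
    print(check_pii(text, ["EMAIL_ADDRESS", "US_SSN"], mode="Mask"))
```

Note that `passed` is false in both modes whenever PII is found. Mask mode only adds the masked text to the result.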

Use cases:

- Block content that contains sensitive personal information

- Mask PII before logging or storing data

- Support compliance with GDPR, HIPAA, and other privacy regulations

- Sanitize user inputs before processing

Configuration

Content to validate

The input content to validate. This typically comes from:

- Agent block outputs: <agent.content>

- Function block results: <function.output>

- API responses: <api.output>

- Any other block output

Validation type

Choose from four validation types:

- Valid JSON: Checks whether the content is properly formatted JSON

- Regex Match: Verifies whether the content matches a regex pattern

- Hallucination Check: Validates against a knowledge base with LLM scoring

- PII Detection: Detects and optionally masks personally identifiable information

Outputs

All validation types return:

- <guardrails.passed>: Boolean indicating whether validation passed

- <guardrails.validationType>: The type of validation performed

- <guardrails.input>: The original input that was validated

- <guardrails.error>: Error message if validation failed (optional)

Additional outputs by type:

Hallucination Check:

- <guardrails.score>: Confidence score (0-10)

- <guardrails.reasoning>: The LLM's explanation

PII Detection:

- <guardrails.detectedEntities>: Array of detected PII entities

- <guardrails.maskedText>: Content with PII masked (if mode = "Mask")

Example use cases

Validate JSON before parsing - Ensure Agent output is valid JSON

Agent (Generate) → Guardrails (Validate) → Condition (Check passed) → Function (Parse)

Prevent hallucinations - Validate customer support responses against the knowledge base

Agent (Response) → Guardrails (Check KB) → Condition (Score ≥ 3) → Send or Flag

Block PII in user inputs - Sanitize user-submitted content

Input → Guardrails (Detect PII) → Condition (No PII) → Process or Reject

Best practices

- Chain with Condition blocks: Use <guardrails.passed> to branch workflow logic based on validation results

- Use JSON validation before parsing: Always validate the JSON structure before attempting to parse LLM outputs

- Choose appropriate PII types: Select only the PII entity types relevant to your use case for better performance

- Set reasonable confidence thresholds: For hallucination detection, adjust the threshold to your accuracy requirements (higher = stricter)

- Use strong models for hallucination detection: GPT-4o or Claude 3.7 Sonnet provide more accurate confidence scores

- Mask PII for logging: Use "Mask" mode when you need to log or store content that may contain PII

- Test regex patterns: Validate your regex patterns thoroughly before deploying them to production

- Monitor validation failures: Track <guardrails.error> messages to identify common validation issues

Guardrails validation runs synchronously in your workflow. For hallucination detection, choose a faster model (such as GPT-4o-mini) if latency is critical.
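The branching pattern that recurs throughout this page, a Condition block reading <guardrails.passed>, can be sketched in Python. The function `next_step`, the branch names, and the optional stricter `review_below` cutoff are all hypothetical. In a real workflow this logic would live in a Condition block rather than in code.

```python
from typing import Optional


def next_step(guardrails: dict, review_below: Optional[float] = None) -> str:
    """Pick a branch name from a Guardrails result (branch names are illustrative)."""
    if not guardrails.get("passed", False):
        return "reject"
    score = guardrails.get("score")
    # Optional second, stricter cutoff: passed, but still worth a human look.
    if review_below is not None and score is not None and score < review_below:
        return "flag"
    return "continue"


if __name__ == "__main__":
    print(next_step({"passed": False, "error": "Invalid JSON"}))
    print(next_step({"passed": True, "score": 4}, review_below=7))
    print(next_step({"passed": True, "score": 9}, review_below=7))
```

Treating a missing `passed` field as a failure keeps the workflow on the safe branch when the validation itself errored out.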