The Guardrails block validates and protects your AI workflows by checking content against multiple validation types. Ensure data quality, prevent hallucinations, detect PII, and enforce format requirements before content moves through your workflow.

Validation Types

JSON Validation

Validates that content is properly formatted JSON. Perfect for ensuring structured LLM outputs can be safely parsed.

Use Cases:

- Validate JSON responses from Agent blocks before parsing

- Ensure API payloads are properly formatted

- Check structured data integrity

Output:

- passed: true if valid JSON, false otherwise

- error: Error message if validation fails (e.g., "Invalid JSON: Unexpected token...")
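The check can be approximated outside the workflow. The following is a minimal sketch, assuming a hypothetical helper named validate_json that returns the passed and error fields described above. The exact error wording produced by the Guardrails block may differ.

```python
import json


def validate_json(content: str) -> dict:
    """Return a result shaped like the JSON validation output (hypothetical helper)."""
    try:
        json.loads(content)
    except json.JSONDecodeError as exc:
        # The message format is an assumption; only the field names come from the docs.
        return {"passed": False, "error": f"Invalid JSON: {exc.msg}"}
    return {"passed": True}


print(validate_json('{"status": "ok"}'))
print(validate_json('{"status": ok}'))
```

The first call passes. The second fails because the value ok is not quoted, which is a common defect in LLM-generated JSON.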

Regex Validation

Checks if content matches a specified regular expression pattern.

Use Cases:

- Validate email addresses

- Check phone number formats

- Verify URLs or custom identifiers

- Enforce specific text patterns

Configuration:

- Regex Pattern: The regular expression to match against (e.g., ^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$ for emails)

Output:

- passed: true if content matches pattern, false otherwise

- error: Error message if validation fails
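To test a pattern before deploying it, a small local harness helps. This is a sketch only: validate_regex is a hypothetical helper, it uses Python's re module (whose dialect may differ from the engine the block uses), and it assumes search semantics, so an unanchored pattern matches anywhere in the content.

```python
import re

# Email pattern taken from the configuration example above.
EMAIL_PATTERN = r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$"


def validate_regex(content: str, pattern: str) -> dict:
    """Return a result shaped like the regex validation output (hypothetical helper)."""
    try:
        compiled = re.compile(pattern)
    except re.error as exc:
        # Surface a broken pattern as a validation failure instead of raising.
        return {"passed": False, "error": f"Invalid regex pattern: {exc}"}
    if compiled.search(content):
        return {"passed": True}
    # The error wording is an assumption, not the block's actual message.
    return {"passed": False, "error": "Content does not match pattern"}


print(validate_regex("user@example.com", EMAIL_PATTERN))
print(validate_regex("not-an-email", EMAIL_PATTERN))
```

Anchoring the pattern with ^ and $ is what forces the whole input to be an email address here. Without the anchors, text that merely contains an address would also pass.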

Hallucination Detection

Uses Retrieval-Augmented Generation (RAG) with LLM scoring to detect when AI-generated content contradicts or isn't grounded in your knowledge base.

How It Works:

- Queries your knowledge base for relevant context

- Sends both the AI output and retrieved context to an LLM

- LLM assigns a confidence score (0-10 scale)

  - 0 = Full hallucination (completely ungrounded)

  - 10 = Fully grounded (completely supported by knowledge base)

- Validation passes if score ≥ threshold (default: 3)

Configuration:

- Knowledge Base: Select from your existing knowledge bases

- Model: Choose the LLM used for scoring (strong reasoning is required; GPT-4o or Claude 3.7 Sonnet recommended)

- API Key: Authentication for the selected LLM provider (hidden automatically for hosted models and for Ollama or vLLM-compatible models)

- Confidence Threshold: Minimum score to pass (0-10, default: 3)

- Top K (Advanced): Number of knowledge base chunks to retrieve (default: 10)

Output:

- passed: true if confidence score ≥ threshold

- score: Confidence score (0-10)

- reasoning: LLM's explanation for the score

- error: Error message if validation fails
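The retrieve, score, and compare flow can be sketched as follows. This is an illustration under stated assumptions, not the block's implementation: check_hallucination, the Retriever and Scorer signatures, and the two stub functions are all hypothetical, and the stubs replace the real knowledge base query and LLM call with toy logic so the example runs on its own. Whether a failed check populates error with this wording is also an assumption.

```python
from typing import Callable, List, Tuple

# Hypothetical signatures standing in for the knowledge base and the scoring LLM.
Retriever = Callable[[str, int], List[str]]              # (query, top_k) -> chunks
Scorer = Callable[[str, List[str]], Tuple[float, str]]   # (output, chunks) -> (score, reasoning)


def check_hallucination(content: str, retrieve: Retriever, score: Scorer,
                        threshold: float = 3, top_k: int = 10) -> dict:
    """Retrieve context, score groundedness on a 0-10 scale, compare to the threshold."""
    chunks = retrieve(content, top_k)
    raw_score, reasoning = score(content, chunks)
    confidence = max(0.0, min(10.0, float(raw_score)))  # clamp to the documented range
    passed = confidence >= threshold
    result = {"passed": passed, "score": confidence, "reasoning": reasoning}
    if not passed:
        result["error"] = f"Confidence score {confidence} is below threshold {threshold}"
    return result


def fake_retrieve(query: str, top_k: int) -> List[str]:
    # Toy knowledge base with a single chunk.
    docs = ["Refunds are available within 30 days of purchase."]
    return docs[:top_k]


def fake_score(output: str, chunks: List[str]) -> Tuple[float, str]:
    # Toy stand-in for the LLM: 10 if the output appears in a chunk, else 0.
    grounded = any(output.lower() in chunk.lower() for chunk in chunks)
    if grounded:
        return 10.0, "Supported by retrieved context"
    return 0.0, "No supporting context found"


print(check_hallucination("Refunds are available within 30 days", fake_retrieve, fake_score))
print(check_hallucination("Refunds are available within 90 days", fake_retrieve, fake_score))
```

With the default threshold of 3, only low-scoring outputs are rejected. Raising the threshold makes the check stricter.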

Use Cases:

- Validate Agent responses against documentation

- Ensure customer support answers are factually accurate

- Verify generated content matches source material

- Quality control for RAG applications

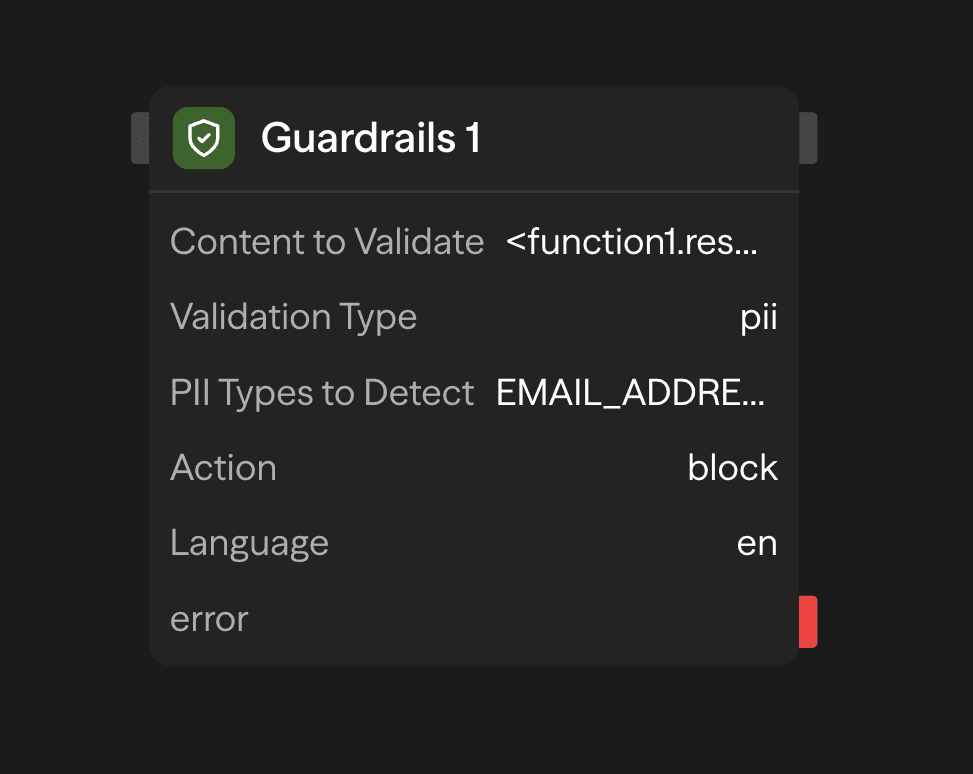

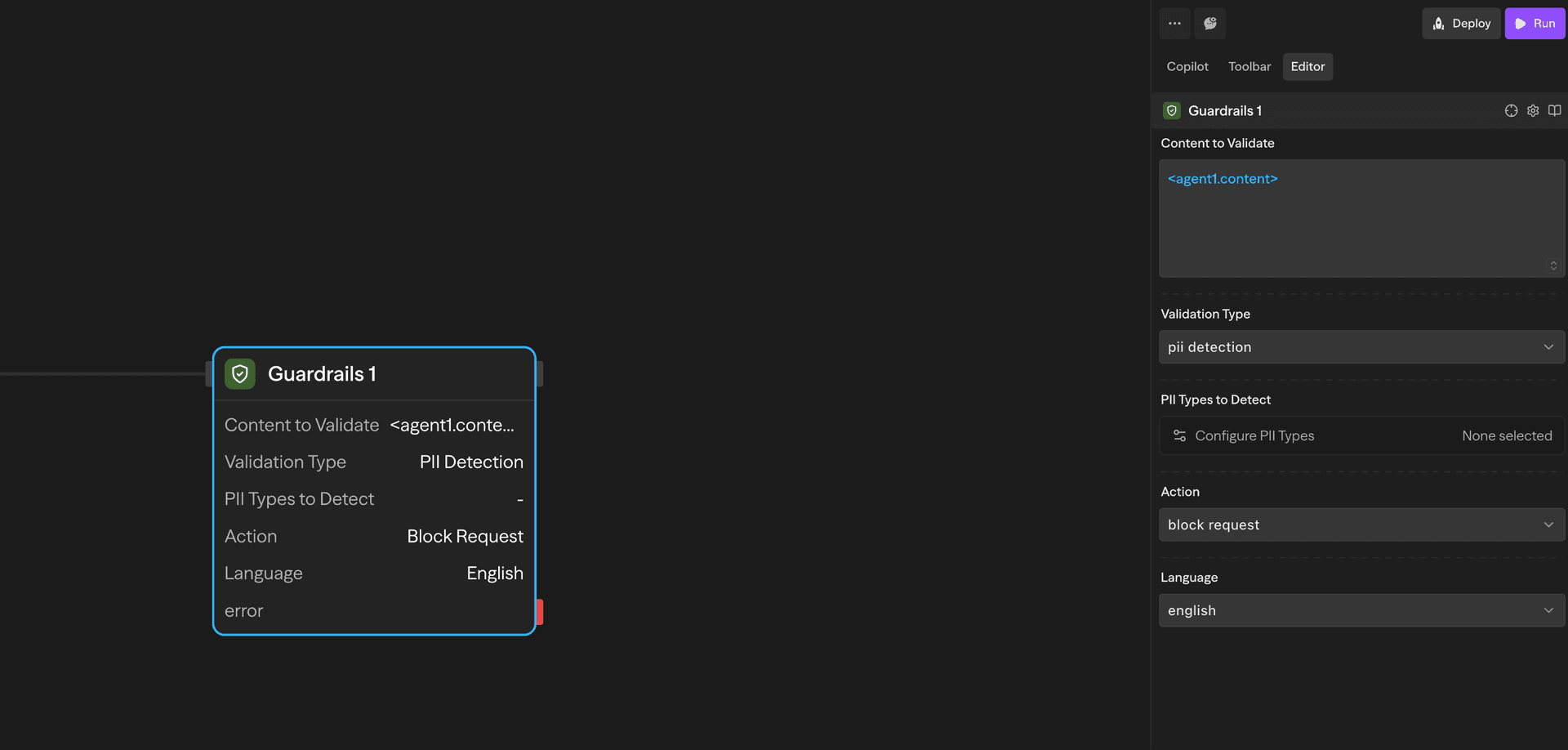

PII Detection

Detects personally identifiable information using Microsoft Presidio. Supports 40+ entity types across multiple countries and languages.

How It Works:

- Pass content to validate (e.g., <agent1.content>)

- Select PII types to detect using the modal selector

- Choose detection mode (Detect or Mask)

- Content is scanned for matching PII entities

- Returns detection results and optionally masked text

Configuration:

- PII Types to Detect: Select from grouped categories via modal selector

  - Common: Person name, Email, Phone, Credit card, IP address, etc.

  - USA: SSN, Driver's license, Passport, etc.

  - UK: NHS number, National insurance number

  - Spain: NIF, NIE, CIF

  - Italy: Fiscal code, Driver's license, VAT code

  - Poland: PESEL, NIP, REGON

  - Singapore: NRIC/FIN, UEN

  - Australia: ABN, ACN, TFN, Medicare

  - India: Aadhaar, PAN, Passport, Voter number

- Mode:

  - Detect: Only identify PII (default)

  - Mask: Replace detected PII with masked values

- Language: Detection language (default: English)

Output:

- passed: false if any selected PII types are detected

- detectedEntities: Array of detected PII with type, location, and confidence

- maskedText: Content with PII masked (only if mode = "Mask")

- error: Error message if validation fails
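The difference between Detect and Mask mode can be illustrated with a simplified stand-in. The sketch below does not use Presidio: it swaps in two plain regular expressions for its recognizers, so it is far less robust. The detect_pii helper, the entity field names (type, start, end, score), and the <ENTITY_TYPE> placeholder format are assumptions made for illustration.

```python
import re

# Simplified stand-in recognizers, keyed by entity type name.
PATTERNS = {
    "EMAIL_ADDRESS": re.compile(r"[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def detect_pii(content: str, entity_types: list, mode: str = "Detect") -> dict:
    """Scan content for the selected entity types; optionally mask the matches."""
    entities = []
    for entity_type in entity_types:
        pattern = PATTERNS.get(entity_type)
        if pattern is None:
            continue  # entity type not covered by this toy example
        for match in pattern.finditer(content):
            entities.append({
                "type": entity_type,
                "start": match.start(),
                "end": match.end(),
                "score": 1.0,  # regex hits are treated as certain in this sketch
            })
    entities.sort(key=lambda entity: entity["start"])
    result = {"passed": not entities, "detectedEntities": entities}
    if mode == "Mask":
        masked = content
        # Replace from the end of the string so earlier offsets stay valid.
        for entity in reversed(entities):
            placeholder = f"<{entity['type']}>"
            masked = masked[:entity["start"]] + placeholder + masked[entity["end"]:]
        result["maskedText"] = masked
    return result


text = "Contact jane@example.com, SSN 123-45-6789"
print(detect_pii(text, ["EMAIL_ADDRESS", "US_SSN"], mode="Mask"))
```

Selecting only the entity types you need keeps the scan small. In this sketch, unselected types are never evaluated at all.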

Use Cases:

- Block content containing sensitive personal information

- Mask PII before logging or storing data

- Support compliance with GDPR, HIPAA, and other privacy regulations

- Sanitize user inputs before processing

Configuration

Content to Validate

The input content to validate. This typically comes from:

- Agent block outputs: <agent.content>

- Function block results: <function.output>

- API responses: <api.output>

- Any other block output

Validation Type

Choose from four validation types:

- Valid JSON: Check if content is properly formatted JSON

- Regex Match: Verify content matches a regex pattern

- Hallucination Check: Validate against knowledge base with LLM scoring

- PII Detection: Detect and optionally mask personally identifiable information

Outputs

All validation types return:

- <guardrails.passed>: Boolean indicating if validation passed

- <guardrails.validationType>: The type of validation performed

- <guardrails.input>: The original input that was validated

- <guardrails.error>: Error message if validation failed (optional)

Additional outputs by type:

Hallucination Check:

- <guardrails.score>: Confidence score (0-10)

- <guardrails.reasoning>: LLM's explanation

PII Detection:

- <guardrails.detectedEntities>: Array of detected PII entities

- <guardrails.maskedText>: Content with PII masked (if mode = "Mask")
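A downstream step typically branches on these fields. The following is a minimal sketch, assuming the Guardrails result is available as a plain dictionary keyed by the output names above. The route function and its three route labels are hypothetical and only illustrate the branching logic.

```python
def route(result: dict) -> str:
    """Pick a next step from a Guardrails-style result dictionary (hypothetical)."""
    if result.get("passed"):
        return "continue"
    if result.get("maskedText") is not None:
        # PII was found in Mask mode: the masked text can be forwarded instead.
        return "use_masked_text"
    return "reject"


print(route({"passed": True}))
print(route({"passed": False, "maskedText": "Contact <EMAIL_ADDRESS>"}))
print(route({"passed": False, "error": "Invalid JSON: Unexpected token"}))
```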

Example Use Cases

Validate JSON Before Parsing - Ensure Agent output is valid JSON

Agent (Generate) → Guardrails (Validate) → Condition (Check passed) → Function (Parse)

Prevent Hallucinations - Validate customer support responses against knowledge base

Agent (Response) → Guardrails (Check KB) → Condition (Score ≥ 3) → Send or Flag

Block PII in User Inputs - Sanitize user-submitted content

Input → Guardrails (Detect PII) → Condition (No PII) → Process or Reject

Best Practices

- Chain with Condition blocks: Use <guardrails.passed> to branch workflow logic based on validation results

- Use JSON validation before parsing: Always validate JSON structure before attempting to parse LLM outputs

- Choose appropriate PII types: Only select the PII entity types relevant to your use case for better performance

- Set reasonable confidence thresholds: For hallucination detection, adjust threshold based on your accuracy requirements (higher = stricter)

- Use strong models for hallucination detection: GPT-4o or Claude 3.7 Sonnet provide more accurate confidence scoring

- Mask PII for logging: Use "Mask" mode when you need to log or store content that may contain PII

- Test regex patterns: Validate your regex patterns thoroughly before deploying to production

- Monitor validation failures: Track <guardrails.error> messages to identify common validation issues

Guardrails validation happens synchronously in your workflow. For hallucination detection, choose faster models (like GPT-4o-mini) if latency is critical.