The Guardrails block validates and protects your AI workflows by checking content against multiple validation types. Use it to ensure data quality, prevent hallucinations, detect personally identifiable information (PII), and enforce format requirements before content flows through your workflow.

Validation Types

JSON Validation

Checks that the content is well-formed JSON. Useful for making sure structured LLM output can be parsed safely.

Use cases:

- Validating JSON responses from Agent blocks before parsing

- Ensuring API payloads are correctly formatted

- Checking the integrity of structured data

Output:

- `passed`: `true` if the JSON is valid, otherwise `false`

- `error`: Error message if validation fails (e.g., "Invalid JSON: Unexpected token...")
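As a rough illustration of the check described above, the following Python sketch parses a string and returns a result shaped like the `passed` and `error` outputs. The function name `validate_json` and the exact error wording are assumptions made for this example; the block's internal implementation is not shown in this document.

```python
import json


def validate_json(content: str) -> dict:
    """Return a result shaped like the block's `passed` / `error` outputs.

    Hypothetical helper for illustration only.
    """
    try:
        json.loads(content)
    except json.JSONDecodeError as exc:
        # exc.msg holds the parser's short description of the problem.
        return {"passed": False, "error": f"Invalid JSON: {exc.msg}"}
    return {"passed": True, "error": None}


if __name__ == "__main__":
    print(validate_json('{"name": "Ada"}'))
    print(validate_json('{"name": '))
```

Returning a result object instead of raising keeps the caller's branching simple: a downstream step only has to look at `passed`.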

Regex Validation

Checks whether the content matches a given regular expression pattern.

Use cases:

- Validating email addresses

- Checking phone number formats

- Verifying URLs or custom identifiers

- Enforcing specific text patterns

Configuration:

- Regex pattern: The regular expression to match against (e.g., `^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$` for emails)

Output:

- `passed`: `true` if the content matches the pattern, otherwise `false`

- `error`: Error message if validation fails
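The email pattern from the configuration above can be tried out with a short Python sketch. The helper `validate_regex` and its error messages are hypothetical, and the use of `search` (rather than a full match) is an assumption; with `search`, the `^` and `$` anchors in the pattern are what force the whole input to match.

```python
import re

# Email pattern quoted from the configuration example above.
EMAIL_PATTERN = r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$"


def validate_regex(content: str, pattern: str) -> dict:
    """Return `passed` / `error` for a regex check (illustrative helper)."""
    try:
        compiled = re.compile(pattern)
    except re.error as exc:
        # A malformed pattern is reported as a failed validation.
        return {"passed": False, "error": f"Invalid regex pattern: {exc}"}
    if compiled.search(content):
        return {"passed": True, "error": None}
    return {"passed": False, "error": "Input does not match the pattern"}


if __name__ == "__main__":
    print(validate_regex("jane.doe@example.com", EMAIL_PATTERN))
    print(validate_regex("not-an-email", EMAIL_PATTERN))
```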

Hallucination Detection

Uses Retrieval-Augmented Generation (RAG) with LLM scoring to detect when AI-generated content contradicts your knowledge base or is not grounded in it.

How it works:

- Queries your knowledge base for relevant context

- Sends both the AI output and the retrieved context to an LLM

- The LLM assigns a confidence score on a scale of 0-10

  - 0 = Full hallucination (completely ungrounded)

  - 10 = Fully grounded (completely supported by the knowledge base)

- Validation passes if the score is ≥ the threshold (default: 3)

Configuration:

- Knowledge base: Select from your existing knowledge bases

- Model: Choose the LLM used for scoring (requires strong reasoning; GPT-4o or Claude 3.7 Sonnet recommended)

- API key: Authentication for the selected LLM provider (hidden automatically for hosted, Ollama, or vLLM-compatible models)

- Confidence threshold: Minimum score required to pass (0-10, default: 3)

- Top K (Advanced): Number of knowledge base chunks to retrieve (default: 10)

Output:

- `passed`: `true` if the confidence score is ≥ the threshold

- `score`: Confidence score (0-10)

- `reasoning`: The LLM's explanation for the score

- `error`: Error message if validation fails
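The pass/fail rule described above (score ≥ threshold, default 3) can be sketched in Python as follows. This is a minimal sketch, not the block's implementation: knowledge base retrieval and the LLM call are replaced by a hypothetical `scorer` callable, and the names `HallucinationResult` and `check_hallucination` are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical scorer signature: (ai_output, context_chunks) -> (score, reasoning).
# In the real block this role is played by the configured LLM.
Scorer = Callable[[str, Sequence[str]], Tuple[float, str]]


@dataclass
class HallucinationResult:
    passed: bool
    score: Optional[float] = None
    reasoning: Optional[str] = None
    error: Optional[str] = None


def check_hallucination(
    ai_output: str,
    context_chunks: Sequence[str],
    scorer: Scorer,
    threshold: float = 3.0,
) -> HallucinationResult:
    """Apply the 0-10 confidence threshold to a scorer's verdict."""
    try:
        score, reasoning = scorer(ai_output, context_chunks)
    except Exception as exc:
        return HallucinationResult(passed=False, error=f"Scoring failed: {exc}")
    if not 0 <= score <= 10:
        return HallucinationResult(passed=False, error=f"Score out of range: {score}")
    # Higher scores mean better grounding, so the check passes at or above the threshold.
    return HallucinationResult(passed=score >= threshold, score=score, reasoning=reasoning)


if __name__ == "__main__":
    fake_scorer = lambda output, chunks: (7.0, "Supported by the retrieved chunks")
    print(check_hallucination("Refunds take 5 days.", ["Refunds take 5 days."], fake_scorer))
```

Because the comparison is inclusive, a score exactly equal to the threshold passes; raising the threshold makes the check stricter.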

Use cases:

- Validating Agent responses against documentation

- Ensuring the factual accuracy of customer support responses

- Checking that generated content agrees with the source material

- Quality control for RAG applications

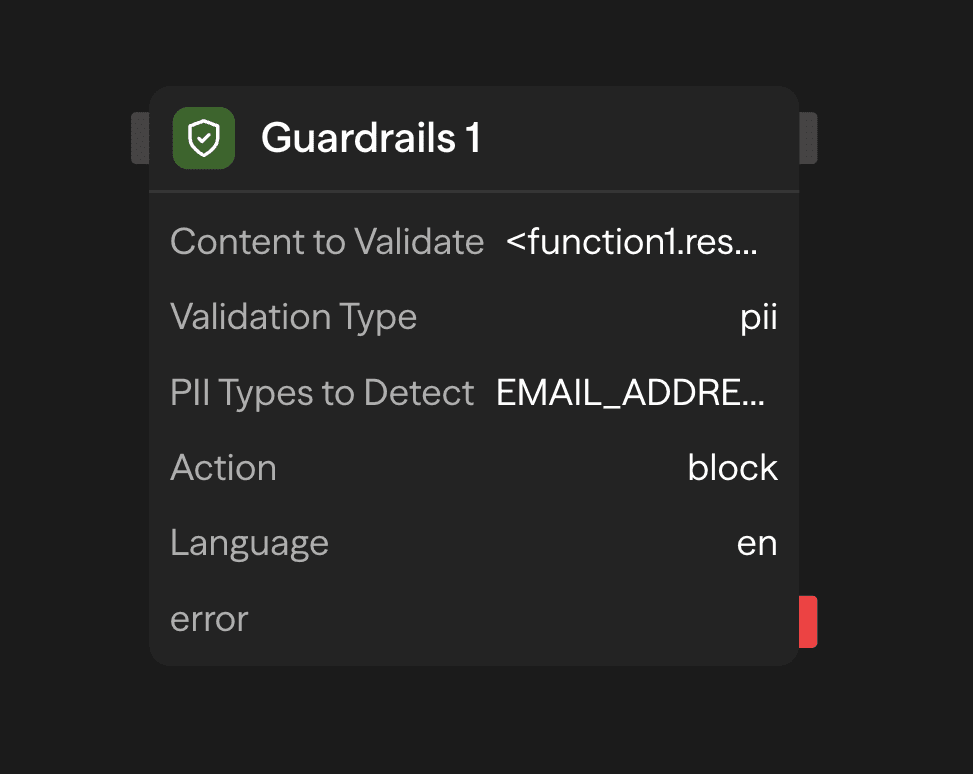

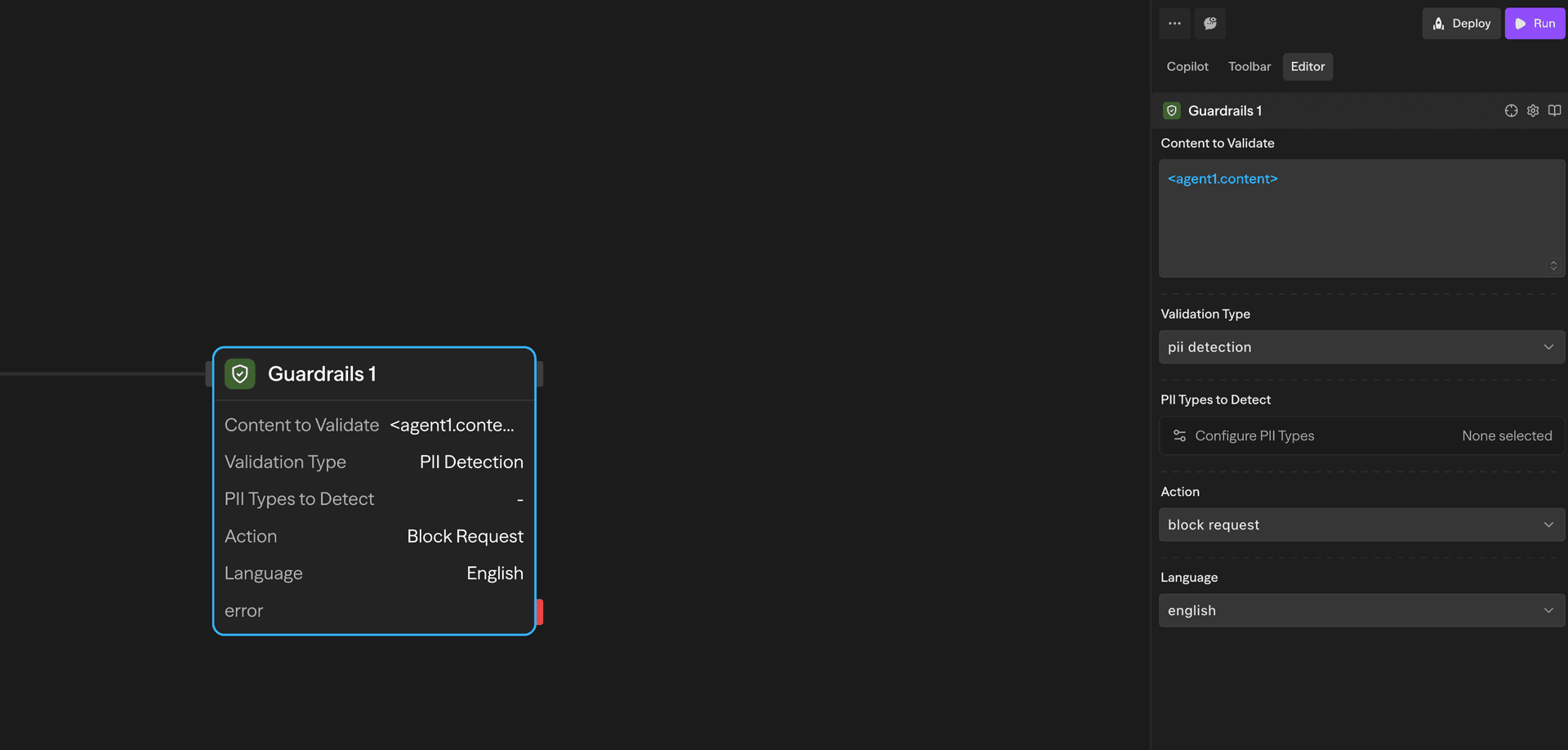

PII Detection

Detects personally identifiable information using Microsoft Presidio. Supports more than 40 entity types across multiple countries and languages.

How it works:

- Pass in the content to validate (e.g., `<agent1.content>`)

- Select the PII types to detect using the modal selector

- Choose the detection mode (Detect or Mask)

- The content is scanned for matching PII entities

- Returns the detection results and, optionally, the masked text

Configuration:

- PII types to detect: Select from grouped categories using the modal selector

  - General: Person name, email, phone, credit card, IP address, etc.

  - USA: SSN, driver's license, passport, etc.

  - UK: NHS number, National Insurance number

  - Spain: NIF, NIE, CIF

  - Italy: Fiscal code, driver's license, VAT number

  - Poland: PESEL, NIP, REGON

  - Singapore: NRIC/FIN, UEN

  - Australia: ABN, ACN, TFN, Medicare

  - India: Aadhaar, PAN, passport, voter ID number

- Mode:

  - Detect: Only identify PII (default)

  - Mask: Replace detected PII with masked values

- Language: Detection language (default: English)

Output:

- `passed`: `false` if any of the selected PII types are detected

- `detectedEntities`: Array of detected PII with type, position, and confidence

- `maskedText`: Content with PII masked (only when mode = "Mask")

- `error`: Error message if validation fails
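To make the Detect and Mask modes concrete, here is a small self-contained Python sketch. It deliberately does not use Microsoft Presidio: two simple regular expressions stand in for the recognizers so the example runs without extra dependencies. The function `detect_pii`, the field names inside each detected entity, and the `<TYPE>` placeholder format are all assumptions for illustration, and overlapping matches are not handled.

```python
import re
from typing import Dict, List

# Simplified stand-in recognizers (NOT Presidio); real detection is far more thorough.
PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "EMAIL_ADDRESS": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IP_ADDRESS": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def detect_pii(text: str, entity_types: List[str], mode: str = "Detect") -> dict:
    """Scan text for the selected entity types; optionally mask what was found."""
    detected = []
    for entity_type in entity_types:
        pattern = PATTERNS.get(entity_type)
        if pattern is None:
            continue  # Unknown type in this toy registry: skip it.
        for match in pattern.finditer(text):
            detected.append(
                {"type": entity_type, "start": match.start(), "end": match.end(), "score": 1.0}
            )

    # Validation fails as soon as any selected PII type is present.
    result = {"passed": not detected, "detectedEntities": detected}

    if mode == "Mask":
        masked = text
        # Replace from right to left so earlier offsets remain valid.
        for entity in sorted(detected, key=lambda e: e["start"], reverse=True):
            masked = masked[: entity["start"]] + f"<{entity['type']}>" + masked[entity["end"] :]
        result["maskedText"] = masked
    return result


if __name__ == "__main__":
    sample = "Contact jane@example.com from 10.0.0.1"
    print(detect_pii(sample, ["EMAIL_ADDRESS", "IP_ADDRESS"], mode="Mask"))
```

Restricting `entity_types` to what a workflow actually needs keeps the scan small, which mirrors the advice to select only relevant PII types.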

Use cases:

- Blocking content that contains sensitive personal information

- Masking PII before logging or storing data

- Complying with GDPR, HIPAA, and other privacy regulations

- Sanitizing user input before processing

Configuration

Content to Validate

The input content to validate. It typically comes from:

- Agent block outputs: `<agent.content>`

- Function block results: `<function.output>`

- API responses: `<api.output>`

- Any other block output

Validation Type

Choose from four validation types:

- Valid JSON: Check whether the content is well-formed JSON

- Regex Match: Check whether the content matches a regex pattern

- Hallucination Check: Validate against a knowledge base with LLM scoring

- PII Detection: Detect and optionally mask personally identifiable information

Outputs

All validation types return:

- `<guardrails.passed>`: Boolean indicating whether validation passed

- `<guardrails.validationType>`: The type of validation performed

- `<guardrails.input>`: The original input that was validated

- `<guardrails.error>`: Error message if validation failed (optional)

Additional outputs by type:

Hallucination Check:

- `<guardrails.score>`: Confidence score (0-10)

- `<guardrails.reasoning>`: The LLM's explanation

PII Detection:

- `<guardrails.detectedEntities>`: Array of detected PII entities

- `<guardrails.maskedText>`: Content with PII masked (when mode = "Mask")
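As a sketch of how a downstream step might consume these outputs, the Python snippet below picks a branch from a result dictionary whose keys mirror the output names listed above. The function `next_step` and the branch labels are hypothetical; in a workflow, this decision would normally be made by a Condition block reading `<guardrails.passed>`.

```python
from typing import Any, Mapping


def next_step(guardrails: Mapping[str, Any]) -> str:
    """Pick a branch label from a guardrails result (illustrative only)."""
    if guardrails.get("passed") is True:
        return "continue"
    # `error` is optional, so fall back to a generic reason when it is absent.
    reason = guardrails.get("error") or "validation failed"
    return f"reject ({guardrails.get('validationType', 'unknown')}): {reason}"


if __name__ == "__main__":
    print(next_step({"passed": True, "validationType": "json", "input": "{}"}))
    print(next_step({"passed": False, "validationType": "json", "error": "Invalid JSON"}))
```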

Example Use Cases

Validate JSON before parsing - Ensure that the Agent output is valid JSON

Agent (Generate) → Guardrails (Validate) → Condition (Check passed) → Function (Parse)

Prevent hallucinations - Validate customer support responses against the knowledge base

Agent (Response) → Guardrails (Check KB) → Condition (Score ≥ 3) → Send or Flag

Block PII in user input - Sanitize content submitted by users

Input → Guardrails (Detect PII) → Condition (No PII) → Process or Reject

Best Practices

- Chain with Condition blocks: Use `<guardrails.passed>` to branch workflow logic based on validation results

- Use JSON validation before parsing: Always validate the JSON structure before attempting to parse LLM output

- Select appropriate PII types: Choose only the PII entity types relevant to your use case for better performance

- Set reasonable confidence thresholds: For hallucination detection, adjust the threshold to your accuracy requirements (higher = stricter)

- Use strong models for hallucination detection: GPT-4o or Claude 3.7 Sonnet provide more accurate confidence scores

- Mask PII for logging: Use "Mask" mode when you need to log or store content that may contain PII

- Test regex patterns: Validate your regex patterns thoroughly before using them in production

- Monitor validation failures: Track `<guardrails.error>` messages to identify common validation problems

Guardrails validation runs synchronously in your workflow. For hallucination detection, consider a faster model (such as GPT-4o-mini) when latency is critical.